Hi all!

I will soon acquire a pretty beefy unit compared to my current setup (a 3-node server, each node with 16C, 512G RAM and 32T of storage).

Currently I run TrueNAS and Proxmox on bare metal and most of my storage is made available to apps via SSHFS or NFS.

I recently started looking for “modern” distributed filesystems and found some interesting S3-like/compatible projects.

To name a few:

- MinIO

- SeaweedFS

- Garage

- GlusterFS

I like the idea of abstracting the filesystem to allow me to move data around, play with redundancy and balancing, etc.

My most important services are:

- Plex (Media management/sharing)

- Stash (Like Plex 🙃)

- Nextcloud

- Caddy with Adguard Home and Unbound DNS

- Most of the Arr suite

- Git, Wiki, File/Link sharing services

As you can see, there is a lot of downloading/streaming/torrenting of files across services. Smaller services are on a Docker VM on Proxmox.

Currently the setup is messy because it evolved organically, but since I will be upgrading to brand-new metal, I am looking for suggestions on the pillars.

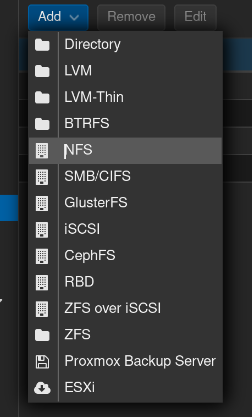

So far, I am considering setting up a Proxmox cluster across the 3 nodes, hosting VMs for the heavy stuff plus a Docker VM.

How do you see the file storage portion? Should I try a full/partial plunge into S3-compatible object storage? What architecture/tech would be interesting to experiment with?

Or should I stick with tried-and-true, boring solutions like NFS Shares?

Thank you for your suggestions!

“Boring”? I’d be more interested in what works without causing problems. NFS is bulletproof.

> NFS is bulletproof.

For it to be bulletproof, it would help if it came with security built in. Kerberos is a complex mess.

Yeah, I’ve ended up setting up VLANs in order to not deal with encryption.

You are 100% right, I meant for the homelab as a whole. I do it for self-hosting purposes, but the journey is a hobby of mine.

So exploring more experimental technologies would be a plus for me.

Most of the things you listed require some very specific constraints to even work, let alone work well. If you’re working with just a few machines, no storage array or high bandwidth networking, I’d just stick with NFS.

I’d only use sshfs if there’s no other alternative. Like if you had to copy over a slow internet link and sync wasn’t available.

NFS is fine for local network filesystems. I use it everywhere and it’s great. Learn to use autos and NFS is just automatic everywhere you need it.

*autofs

sshfs is somewhat unmaintained; only “high-impact issues” are being addressed: https://github.com/libfuse/sshfs

I would go for NFS.

But NFS has mediocre snapshotting capabilities (unless his setup also includes >10G NICs).

I assume you are referring to Filesystem Snapshotting? For what reason do you want to do that on the client and not on the FS host?

I have my NFS storage mounted via 2.5G and use qcow2 disks. It is slow to snapshot…

Maybe I misunderstood your question?
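For a rough feel of why link speed matters when disk images live on NFS, here is a back-of-the-envelope sketch in Python. It only computes the line-rate floor for moving a given amount of data. The 100 GB image size is a made-up example, `transfer_seconds` is a hypothetical helper, and real NFS throughput will be lower because of protocol and disk overhead. Slow qcow2 snapshots may also have causes other than bandwidth.

```python
def transfer_seconds(size_gb: float, link_gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Best-case seconds to move size_gb (decimal gigabytes) over a network link.

    efficiency is the assumed fraction of line rate actually achieved (0 < efficiency <= 1).
    """
    if size_gb < 0:
        raise ValueError("size_gb must be non-negative")
    if link_gbit_per_s <= 0:
        raise ValueError("link_gbit_per_s must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    gigabits = size_gb * 8  # 1 byte = 8 bits
    return gigabits / (link_gbit_per_s * efficiency)


if __name__ == "__main__":
    image_gb = 100  # hypothetical disk image size
    for link in (1.0, 2.5, 10.0):
        secs = transfer_seconds(image_gb, link)
        print(f"{link:>4} Gbit/s: at least {secs:.0f} s to move {image_gb} GB")
```

Even at full line rate, a 2.5G link needs several minutes for an image of that size, which is one reason people mention faster NICs in this context.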

If I understand you correctly, your server is accessing the VM disk images via an NFS share?

That does not sound efficient at all.

There was no other easy option that I could figure out.

I didn’t manage to understand iSCSI in the time I had patience for it, and I was desperate to finish the project and use my stuff.

Thus NFS.

Your workload just won’t see much difference with any of them, so take your pick.

NFS is old, but if you add security constraints, it works really well. If you want to tune for bandwidth, try iSCSI; bonus points if you get ZFS-over-iSCSI working with a tuned block size. This last one is blazing fast if you have ZFS at each end and you do ZFS snapshots.

Beyond that, you’re getting into very tuned SAN things, which people build their careers on. It’s a real rabbit hole.

I’m using ceph on my proxmox cluster but only for the server data, all my jellyfin media goes into a separate NAS using NFS as it doesn’t really need the high availability and everything else that comes with ceph.

It’s been working great. You can set everything up through the Proxmox GUI and it’ll show up like any other storage for the VMs. You need enterprise-grade NVMe drives for it though, or it’ll chew through them in no time. You also want a separate network connection for Ceph traffic if you’re moving a lot of data.

Very happy with this setup.

NFS gives me the best performance. I’ve tried GlusterFS (not at home, for work), and it was kind of a pain to set up and maintain.

What are you hosting the storage on? Are you providing this storage to apps, containers, VMs, proxmox, your desktop/laptop/phone?

Currently, most of the data is on a bare-metal TrueNAS.

Since each node will come with 32TB of storage, this would be plenty for the foreseeable future (currently only using 20TB across everything).

The data should be available to Proxmox VMs (for their disk images) and selfhosted apps (mainly Nextcloud and Arr apps).

A bonus would be to have a quick/easy way to “mount” some volume to a Linux Desktop to do some file management.

Proxmox supports Ceph natively, and you can mount it from a workstation too, I think. I assume it operates in a shared mode, unlike iSCSI.

If the apps are running on a VM in proxmox, then the underlying storage doesn’t matter to them.

NFS is probably the most mature option, but I don’t know if proxmox officially supports it.

Proxmox does support NFS

But let’s say that I would like to decommission my TrueNAS and thus have the storage exclusively on the 3-node server: how would I integrate Proxmox and storage?

(Much appreciated btw)

I think the best option for distributed storage is ceph.

At least something that’s distributed and fail safe (assuming OP targets this goal).

And if Proxmox doesn’t support it natively, one could probably still configure it locally on the underlying Debian OS.
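As a rough sanity check on capacity for a replicated setup such as Ceph, here is a small Python sketch using the numbers from the thread (3 nodes, 32 TB each, about 20 TB in use). It assumes a replicated pool that keeps 3 copies of every object, one per node. That replica count is an assumption, not something OP stated, and the sketch ignores filesystem overhead and the free-space headroom a cluster needs. `usable_tb` is a hypothetical helper name.

```python
def usable_tb(nodes: int, tb_per_node: float, replicas: int) -> float:
    """Usable capacity in TB when every object is stored `replicas` times."""
    if nodes <= 0 or tb_per_node <= 0:
        raise ValueError("nodes and tb_per_node must be positive")
    if not 1 <= replicas <= nodes:
        raise ValueError("replicas must be between 1 and the number of nodes")
    raw_tb = nodes * tb_per_node
    return raw_tb / replicas


if __name__ == "__main__":
    nodes, tb_per_node, used_tb = 3, 32.0, 20.0  # figures quoted in the thread
    for replicas in (2, 3):  # assumed replica counts, for comparison
        usable = usable_tb(nodes, tb_per_node, replicas)
        fill = used_tb / usable
        print(f"{replicas} copies: {usable:.0f} TB usable, {fill:.1%} full with {used_tb:.0f} TB of data")
```

With these numbers, 3 copies leave about 32 TB usable, so 20 TB of data would already fill roughly 62.5% of it. That is worth keeping in mind before decommissioning the TrueNAS box.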