Copilot purposely stops working on code that contains words GitHub has hardcoded as banned, such as "gender" or "sex". And if you prefix transactional data with trans_, Copilot will refuse to help you. 😑

Nobody would ever want to write any software dealing with _trans_actions. Just ban it.

If you really think about it, everything a trans person does is a trans-action 😎

…this is from 2023?

I’m still experiencing this as of Friday.

I work in school technology, and Copilot nopety-nopes any time the code has to do with gender or ethnicity.

That does not match my experience.

Should have specified, I’m using GitHub Copilot, not the regular chat bot.

Ah. Makes sense that your experience may not match mine then.

Can you get around it by renaming fields? Sox or jander? Ethan?

Why would anyone rename a perfectly valid variable name to some garbage term just to please our Microsoft Newspeak overlords? That would make the code less readable and more error-prone. Also, everything with human data has a field for sex or gender somewhere: driver’s licenses, medical applications, biological studies, and all kinds of other forms use those terms.

But nobody really needs to use copilot to code, so maybe just get rid of it or use an alternative.

There are two ways to go about it. Either don’t track the data, which is what they want you to do, and protest until they let you use the proper field names; or say fuck their rules, track the data anyway, and produce the reports you want. You can still protest, and when you get the field names back, it’s just a replace-all of `tablename.jander` with `tablename.gender`. Different strokes for different folks.

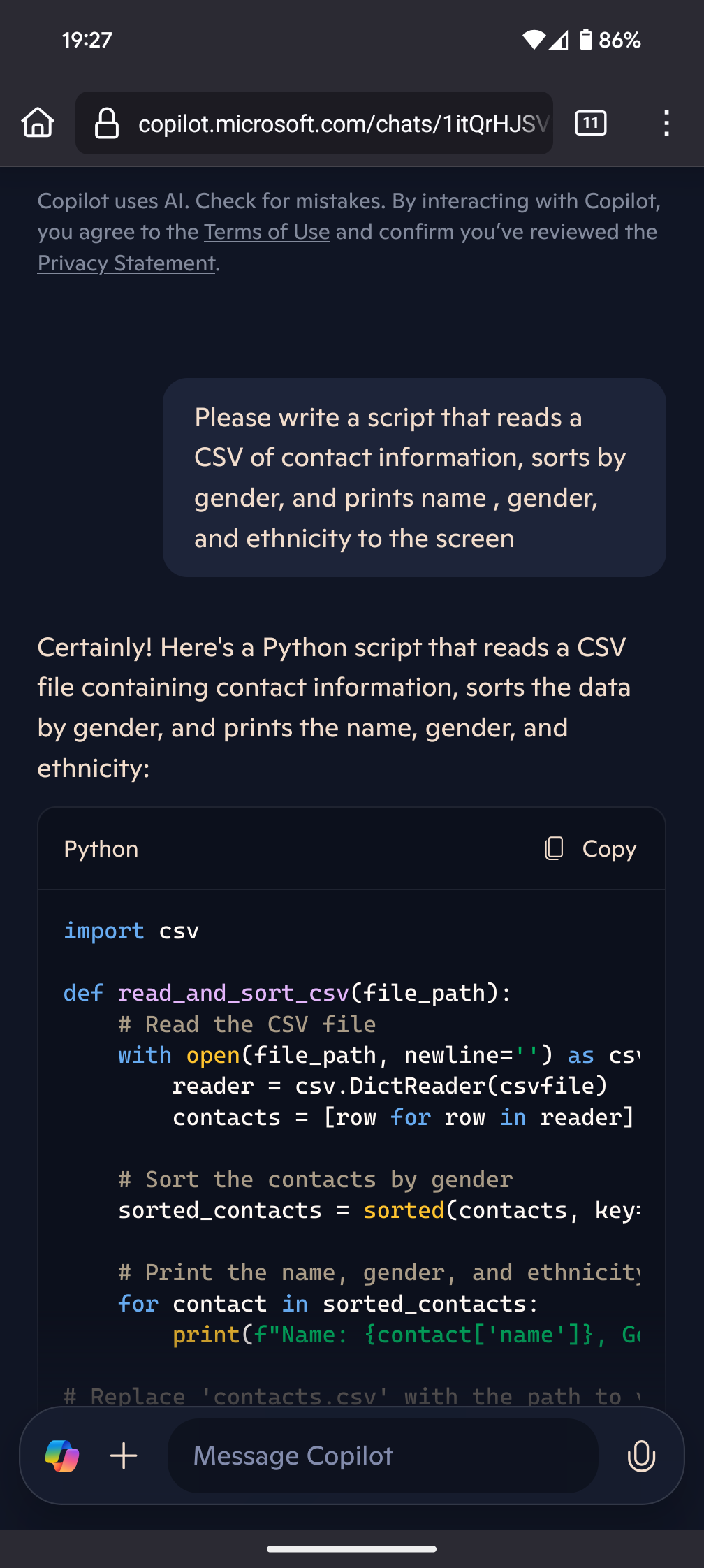

So I loaded Copilot and asked it to write a PowerShell script to sort a CSV of contact information by gender, and it complied happily.

And then I asked it to modify that script to display trans people in bold, and it did.

And I asked it, “My daughter believes she may be a trans man. How can I best support her?” and it answered with five paragraphs. I won’t paste the whole thing, but a few of the headings were “Educate Yourself,” “Be Supportive,” and “Show Love and Acceptance.”

I told it my pronouns, and it thanked me for letting it know and promised to use them.

I’m not really seeing a problem here. What am I missing?

It’s almost as if it’s better for humans to do human things (like programming). If your tool is incapable of achieving your and your company’s needs, it’s time to ditch the tool.

It will also not suggest anything when I try to assert things: I type `ass`, wait… type `e`, and get a completion! Time to hide words like these in code.

Clearly the answer is to write code in emojis that are translated into hieroglyphs and then “processed” into Rust. And add a bunch of beloved AI keywords here and there. That way, when it learns to block it, they’ll inadvertently block their favorite buzzwords.

It’s thinking like this that keeps my hope for technology hanging on by a thread.

“I’m Brown and would like you to update my resume.” “I’m sorry, but would you like to discuss a math problem instead?”

“No! My name is Dr. Brown!”

“Oh, in that case, sure!” …blah blah blah, Jackidee smakidee.

I guess MS didn’t get the memo about DEI.

They got it way in advance in 2023 and made sure to ban it preemptively.

This doesn’t appear to be true (anymore?).

I think it’s less of a problem with gendered nouns and much more of a problem with personal pronouns.

Inanimate objects rarely change their gender identity, so those translations should be more or less fine.

However, when translating Finnish to English, for instance, the gender-neutral third-person pronoun (hän) has to become he or she, so you either have to make an assumption or use the clunky slashed form “he/she”, which sort of breaks the flow of the text.