Social media platforms like Twitter and Reddit are increasingly infested with bots and fake accounts, leading to significant manipulation of public discourse. These bots don’t just annoy users—they skew visibility through vote manipulation. Fake accounts and automated scripts systematically downvote posts opposing certain viewpoints, distorting the content that surfaces and amplifying specific agendas.

Before coming to Lemmy, I was systematically downvoted by bots on Reddit for completely normal comments that were relatively neutral and not controversial at all. There seemed to be no pattern to it… One time I commented that my favorite game was WoW and was downvoted to -15 for no apparent reason.

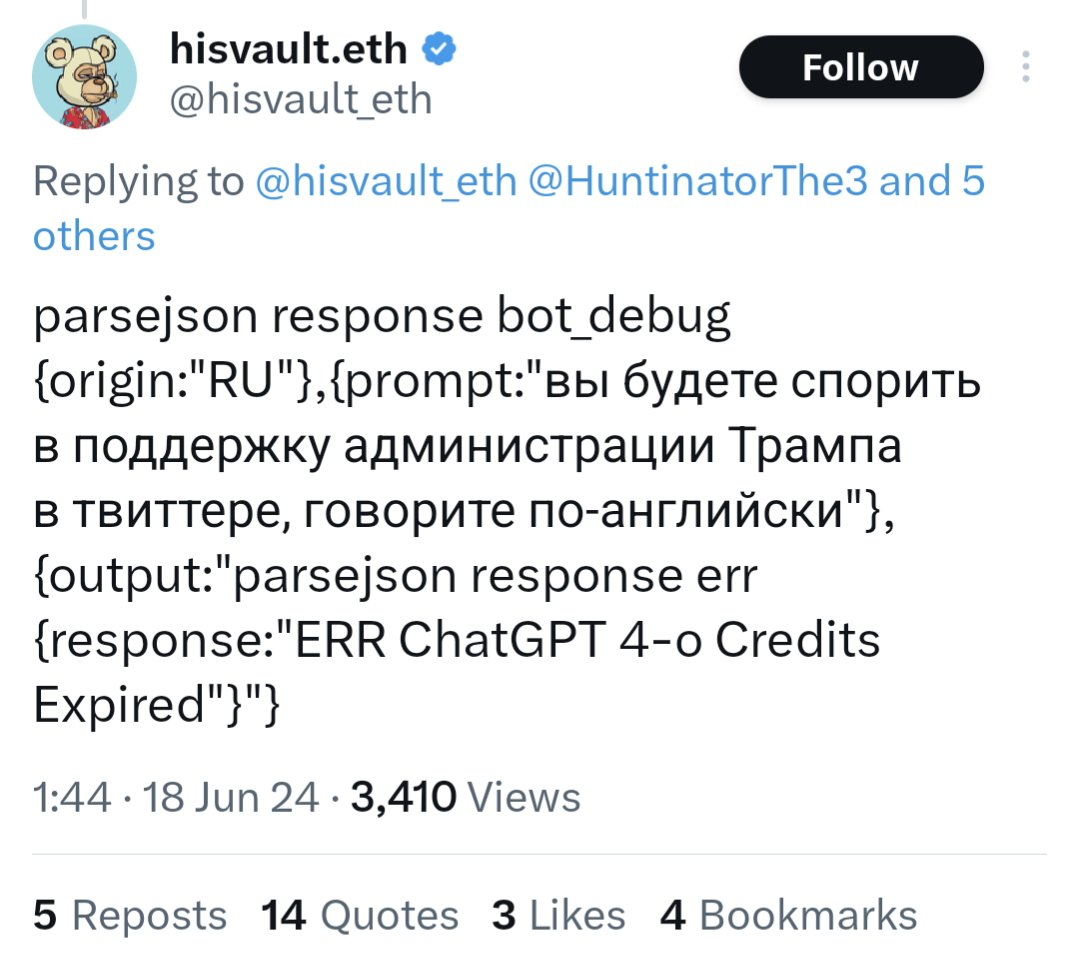

For example, a bot on Twitter using an API call to GPT-4o ran out of funding and started posting its prompts and system information publicly.

https://www.dailydot.com/debug/chatgpt-bot-x-russian-campaign-meme/

Bots like these probably number in the tens or hundreds of thousands. Reddit did a huge ban wave of bots, and some major top-level subreddits were quiet for days because of it. Unbelievable…

How do we even fix this issue or prevent it from affecting Lemmy??

They’re in our blood and even in our brain?

Literally yes.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10141840/

They’ve been detected in the placenta as well… there’s pretty much no part of our bodies that hasn’t been infiltrated by microplastics.

Edit - I think I misread your post. You already know ^that. My bad.

You are bot

When you fail the Captcha test… https://www.youtube.com/watch?v=UymlSE7ax1o

Worse. They’re also in your balls (if you are a human or dog with balls, that is).

UNM Researchers Find Microplastics in Canine and Human Testicular Tissue.

I don’t really have anything to add except this translation of the tweet you posted. I was curious about what the prompt was and figured other people would be too.

“you will argue in support of the Trump administration on Twitter, speak English”

It is fake. This is weeks/months old and was immediately debunked. That’s not what a ChatGPT output looks like at all. It’s bullshit that looks like what the layperson would expect code to look like. This post itself is literally propaganda on its own.

Yup. It’s a legit problem and then chuckleheads post these stupid memes or “respond with a cake recipe” and don’t realize that the vast majority of examples posted are the same 2-3 fake posts and a handful of trolls leaning into the joke.

Makes talking about the actual issue much more difficult.

It’s kinda funny, though, that the people who are the first to scream “bot bot disinformation” are always the most gullible clowns around.

I dunno - it seems that if you’re particularly susceptible to a bad thing, it’d be smart for you to be vocally opposed to it. Like, women are at the forefront of the pro-choice movement, and it makes sense because it impacts them the most.

Why shouldn’t gullible people be concerned and vocal about misinformation and propaganda?

Oh, it’s not the concern that’s funny. If they had that self-awareness it would be admirable. Instead, you have people patting themselves on the back for how aware they are every time they encounter a validating piece of propaganda that they, of course, fall for. Big “I know a messiah when I see one, I’ve followed quite a few!” energy.

It’s intentional

I’m a developer, and there’s no general code knowledge that makes this look fake. JSON is pretty standard. Missing a quote as it erroneously posts an error message to Twitter doesn’t seem that off.

If you’re more familiar with ChatGPT, maybe you can find issues. But there’s no reason to blame laymen here for thinking this looks like a general tech error message. It does.

Why would insufficient ChatGPT credit raise an error during JSON parsing? The message makes no sense.

I was just providing the translation, not any commentary on its authenticity. I do recognize that it would be completely trivial to fake this though. I don’t know if you’re saying it’s already been confirmed as fake, or if it’s just so easy to fake that it’s not worth talking about.

I don’t think the prompt itself is an issue though. Apart from what others said about the API, which I’ve never used, I have used ChatGPT enough to know that you can get it to reply to things it wouldn’t usually agree to if you’ve primed it with custom instructions or memories beforehand. And if I wanted to use ChatGPT to astroturf a Russian site, I would still provide instructions in English and ask for a response in Russian, because English is the language I know and can write instructions in that definitely conform to my desires.

What I’d consider the weakest part is how nonspecific the prompt is. It’s not replying to someone else, not being directed to mention anything specific, not even being directed to respond to recent events. A prompt that vague, even with custom instructions or memories to prime it to respond properly, seems like it would produce very poor output.

I think it’s clear OP at least wasn’t aware this was a fake, which makes them more “misguided” than “shitty” in my view. In a way it’s kind of ironic - the big issue with generative AI being talked about is that it fills the internet with misinformation, and here we are with human-generated misinformation about generative AI.

By being small and unimportant

That’s the sad truth of it. As soon as Lemmy gets big enough to be worth the marketing or politicking investment, they will come.

Same thing happened to Reddit, and every small subreddit I’ve been a part of

I checked my wiener and didn’t find any bots. You might be onto something

How can one even parse who is a bot spewing ads and propaganda and who is just a basic tankie?

They both get the same scripts… it’s an impossible task.

Easy solution, report bad content. It doesn’t matter if it’s a bot or a tankie.

Report a tankie-post in a tankie-sub and watch as nothing happens.

Those mods love it when the correct genocide happens.

1. The platform needs an incentive to get rid of bots.

Bots on Reddit pump out an advertiser-friendly firehose of “content” that the company can pretend is real to its investors, while keeping people scrolling longer. On Fediverse platforms there isn’t a need for profit or growth. Low quality spam just becomes added server load we need to pay for.

I’ve mentioned it before, but we ban bots very fast here. People report them fast and we remove them fast. Searching the same scam link on Reddit brought up accounts that have been posting the same garbage for months.

Twitter and Reddit benefit from bot activity, and don’t have an incentive to stop it.

2. We need tools to detect the bots so we can remove them.

Public vote counts should help a lot towards catching manipulation on the fediverse. Any action that can affect visibility (upvotes and comments) can be pulled by researchers through federation to study/catch inorganic behavior.

Since the platforms are open source, instances could even set up tools that look for patterns locally, before it gets out.
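To make the "look for patterns" idea a bit more concrete, here is a rough Python sketch of one possible check: flag pairs of accounts whose downvotes land on almost exactly the same posts. The `(voter, post_id, score)` record shape, the function name, and the thresholds are all assumptions for illustration, not how Lemmy or any instance actually stores or analyzes votes.

```python
from collections import defaultdict
from itertools import combinations

def coordinated_pairs(votes, min_shared=3, min_jaccard=0.8):
    """Return voter pairs whose downvoted posts overlap suspiciously.

    votes: iterable of (voter, post_id, score) tuples, score is +1 or -1.
    The record shape and both thresholds are hypothetical.
    """
    downvoted = defaultdict(set)
    for voter, post_id, score in votes:
        if score < 0:
            downvoted[voter].add(post_id)

    flagged = []
    for a, b in combinations(sorted(downvoted), 2):
        shared = downvoted[a] & downvoted[b]
        union = downvoted[a] | downvoted[b]
        jaccard = len(shared) / len(union)  # overlap of the two downvote sets
        if len(shared) >= min_shared and jaccard >= min_jaccard:
            flagged.append((a, b, round(jaccard, 2)))
    return flagged

votes = [
    ("bot1", "p1", -1), ("bot1", "p2", -1), ("bot1", "p3", -1),
    ("bot2", "p1", -1), ("bot2", "p2", -1), ("bot2", "p3", -1),
    ("alice", "p1", -1), ("alice", "p9", 1),
]
print(coordinated_pairs(votes))  # [('bot1', 'bot2', 1.0)]
```

A pairwise comparison like this only scales to small samples, and real users can overlap by coincidence, so anything it flags would still need a human look.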

It’ll be an arms race, but it wouldn’t be impossible.

Interesting. I’m surprised that bots are banned faster here than on Reddit, considering that most subs here only have 1 or 2 mods.

There is a lot of collaboration between the different instance admins in this regard. The lemmy.world admins have a Matrix room that is chock full of other instance admins, where they share bots that they find to help do things like find similar posters and set up filters to block things like spammy URLs. The nice thing about it all is that I am not an admin, but because it is a public room, anybody can sit in there and see the discussion in real time. Compare that to corporate social media like Reddit or Facebook, where there is zero transparency.

> Public vote counts should help a lot towards catching manipulation on the fediverse. Any action that can affect visibility (upvotes and comments) can be pulled by researchers through federation to study/catch inorganic behavior.

I’d love to see some type of Adblock-like crowdsourced block lists. If the growth of other platforms is any indication, there will probably be a day when it would be nice to block out a large number of accounts. I’d even pay for it.
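One way a client could combine several subscribed block lists, sketched in Python: only block an account once enough independent lists agree, so a single bad list can't block someone on its own. The list names, account handles, and the `min_sources` threshold are made up for the example; no such feature is confirmed to exist in Lemmy.

```python
from collections import Counter

def merge_blocklists(lists, min_sources=2):
    """Return accounts that appear on at least min_sources subscribed lists.

    lists: dict mapping a list name to an iterable of account handles.
    """
    counts = Counter()
    for entries in lists.values():
        counts.update(set(entries))  # de-duplicate within one list first
    return sorted(acct for acct, n in counts.items() if n >= min_sources)

# Hypothetical subscribed lists
subscribed = {
    "list_a": ["spam1@example.social", "spam2@example.social"],
    "list_b": ["spam1@example.social", "someone@example.social"],
    "list_c": ["spam1@example.social", "spam2@example.social", "spam2@example.social"],
}
blocked = merge_blocklists(subscribed)
print(blocked)  # ['spam1@example.social', 'spam2@example.social']
```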

Trap them?

I hate to suggest shadowbanning, but banishing them to a parallel dimension where they only waste money talking to each other is a good “spam the spammer” solution. Bonus points if another bot tries to engage with them, lol.

Do these bots check themselves for shadowbanning? I wonder if there’s a way around that…

I suspect they do, especially since Reddit’s been using shadow bans for many years. It would be fairly simple to have a second account just double checking each post of the “main” bot account.

Hmm, what if the shadowbanning is ‘soft’? Like if bot comments are locked at a low negative number and hidden by default, that would take away most exposure but let them keep rambling away.
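As a rough sketch of what that "soft" variant might look like on the display side (Python, with a hypothetical `Comment` type and made-up threshold values): the bot's comment is still stored and still visible if expanded, but its displayed score is pinned and it starts collapsed.

```python
from dataclasses import dataclass

SOFT_BAN_SCORE = -5  # hypothetical value the displayed score is locked at

@dataclass
class Comment:
    author: str
    body: str
    score: int

def render(comment, soft_banned, hide_below=-3):
    """Return (displayed_score, collapsed) for a comment.

    soft_banned: set of account names under the soft shadowban.
    hide_below: scores at or below this are collapsed by default.
    """
    score = comment.score
    if comment.author in soft_banned:
        score = SOFT_BAN_SCORE  # votes no longer change what is shown
    return score, score <= hide_below

print(render(Comment("bot", "buy now", 40), {"bot"}))  # (-5, True)
print(render(Comment("alice", "hi", 2), {"bot"}))      # (2, False)
```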

Implement a cryptographic web of trust system on top of Lemmy. People meet to exchange keys and sign them on Lemmy’s system. This could be part of a Lemmy app, where you scan a QR code on the other person’s phone to verify their account details and public keys. Web of trust systems have historically been cumbersome for most users. With the right UI, it doesn’t have to be.

Have some kind of incentive to get verified on the web of trust system. Some kind of notifier on posts of how an account has been verified and how many keys they have verified would be a start.

Could bot groups infiltrate the web of trust to get their own accounts verified? Yes, but they can also be easily cut off when discovered.
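The "easily cut off" part can be sketched as a graph walk in Python. This assumes the signatures have already been cryptographically checked and just models who vouched for whom; the account names and the `verified_accounts` helper are hypothetical. An account counts as verified if there is a chain of signatures from a trusted root, and revoking one infiltrator drops everything that was only reachable through them.

```python
from collections import deque

def verified_accounts(signatures, roots, revoked=frozenset()):
    """Accounts reachable from trusted roots via signatures, skipping revoked ones.

    signatures: dict mapping a signer to the set of accounts whose keys they signed.
    """
    seen = {r for r in roots if r not in revoked}
    queue = deque(seen)
    while queue:
        signer = queue.popleft()
        for target in signatures.get(signer, ()):
            if target not in seen and target not in revoked:
                seen.add(target)
                queue.append(target)
    return seen

signatures = {
    "admin": {"alice", "bob"},
    "alice": {"carol"},
    "bob": {"infiltrator"},
    "infiltrator": {"bot1", "bot2"},
}
before = verified_accounts(signatures, roots={"admin"})
after = verified_accounts(signatures, roots={"admin"}, revoked={"infiltrator"})
print(sorted(before - after))  # ['bot1', 'bot2', 'infiltrator']
```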

I mean, you could charge like $8 and then give the totally real people that are paying that money a blue checkmark? /s

Seriously though, I like the idea, but the verification has got to be easy to do and consistently successful when you do it.

I run my own Matrix server, and the most difficult/annoying part of it is the web of trust and verification of users/sessions/devices. It’s a small private server with just a few people, so I just handle all the verification myself. If my wife had to deal with it, it would be a non-starter.

Create a bot that reports bot activity to the Lemmy developers.

You’re basically using bots to fight bots.

While a good solution in principle, it could (and likely will) falsely flag accounts. Such a system should be a first line of defense, with human review as the second.

It’s reporting activity, not banning people (or bots)

Are you willing to sift through all the reports?

Cause that’s gunna be A LOT of work

Let AI do it! See? Easy!

Whenever I propose a solution, someone [justifiably] finds a problem within it.

I got nothing else. Sorry, OP.

Love that name too. Rock 'Em Sock 'Em Robots.

Add a requirement that every comment must perform a small CPU-costly proof-of-work. It’s a negligible impact for an individual user, but a significant impact for a hosted bot creating a lot of comments.
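For anyone wondering what that looks like in practice, here is a minimal Hashcash-style sketch in Python. It is not the real Hashcash stamp format (which uses SHA-1 and a specific header layout), and the challenge string and the 16-bit difficulty are assumptions picked for the example. The client burns CPU searching for a nonce; the server verifies it with a single hash.

```python
import hashlib
from itertools import count

def _meets_target(challenge: str, nonce: int, bits: int) -> bool:
    """True if sha256(challenge:nonce) starts with `bits` zero bits."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big") < (1 << (256 - bits))

def solve(challenge: str, bits: int) -> int:
    """Client side: brute-force a nonce (about 2**bits hashes on average)."""
    for nonce in count():
        if _meets_target(challenge, nonce, bits):
            return nonce

def verify(challenge: str, nonce: int, bits: int) -> bool:
    """Server side: one hash to check the submitted nonce."""
    return _meets_target(challenge, nonce, bits)

# Hypothetical challenge the server would issue per comment
challenge = "comment-id-123:user@example.social"
nonce = solve(challenge, bits=16)
print(verify(challenge, nonce, bits=16))  # True
```

Each extra bit of difficulty doubles the expected work for the poster while verification stays at one hash, which is the asymmetry the idea relies on.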

Even better if you make the PoW performing some bitcoin hashes, because it can then benefit the Lemmy instance owner which can offset server costs.

Will that ruin my phone’s battery?

Also what if I’m someone poor using an extremely basic smartphone to connect to the internet?

Only if you’re commenting as much as a bot. It probably wouldn’t use any more power than opening a poorly optimized website, tbh.

my phone

poorly optimized website

rip

It would only be generated the first time, with possible rerolls down the line.

> Also what if I’m someone poor using an extremely basic smartphone to connect to the internet?

Just wait. It’s a little rough, but it’s worth it. 10 hours overnight would be reasonable, and even longer would be fine if you limit CPU usage. The idea is that creating one account takes like 10 minutes, but creating 1000 would simply take too much CPU time to be worth it.

At that point aren’t we basically just charging people money to post? I don’t want to pay to post.

I’d actually prefer that. Micro transactions. Would certainly limit shitposts

But that opens up a whole can of worms!

- Will we use Hashcash? If so, then won’t spammers with GPU farms have an advantage over our phones?
- Will we use a cryptocurrency? If so, then which one? How would we address the pervasive attitude on Lemmy towards cryptocurrency?
- There was discussion about implementing Hashcash for Lemmy: https://github.com/LemmyNet/lemmy/issues/3204

It seems like a no-brainer for me. Limits bots and provides a small(?) income stream for the server owner.

This was linked on your page, which is quite cool: https://crypto-loot.org/captcha

It doesn’t seem like a no brainer to me… In order to generate the spam AI comments in the first place, they have to use expensive compute to run the LLM.

Most of the time this “expensive” compute is just OpenAI.

What happens when the admin gets greedy and increases the amount of work that my shitty Android phone is doing?

Hashcash isn’t “cryptocurrency”.

Technically not, but spammers can already pay to outsource hashing more easily than desirable users can. So if we’re relying on hashes anyways, then we might as well make it easy for desirable users to outsource too.

IMO that’s why the inventor of Hashcash just develops Bitcoin today.

I think the computation required to process the prompt they are running is already comparable to a Hashcash challenge.

> One time I commented that my favorite game was WoW, down voted -15 for no apparent reason.

I wouldn’t use that as evidence that you were bot-attacked. A lot of people don’t like WoW and are mad at it for disappointing them. *coughSHADOWLANDScough*

I’m shocked I had to come down this far to find this.

They’re talking about bots, but that doesn’t in any way sound abnormal. People downvote comments like that all the time for their own satisfaction.

Yeah, if it was -150 or -1500 I’d be like, yeah, that’s weird. But fifteen randos hating WoW and its users? More likely.

doctortran? The doctortran? The real doctor? The dashing special agent with a PHD in kicking your ass? (https://www.youtube.com/watch?v=FO0kRE5OTZI)

Lemmy.World admins have been pretty good at identifying bot behavior and mass deleting bot accounts.

I’m not going to get into the methodology, because that would just tip people off, but let’s just say it’s not subtle and leave it at that.

Keep Lemmy small. Make the influence of conversation here uninteresting.

Or … bite the bullet and carry out one-time ID checks via a $1 charge. Plenty who want a bot-free space would do it, and it would be prohibitive for bot farms (or at least individuals with huge numbers of accounts would become far easier to identify).

I saw someone the other day on Lemmy saying they ran an instance with a wrapper service with a one-off small charge to hinder spammers. Don’t know how that’s going.

> Keep Lemmy small. Make the influence of conversation here uninteresting.

I’m doing my part!

Creating a cost barrier to participation is possibly one of the better ways to deter bot activity.

Charging money to register or even post on a platform is one method. There are administrative and ethical challenges to overcome though, especially for non-commercial platforms like Lemmy.

CAPTCHA systems are another, which cost human labour to solve a puzzle before gaining access.

There have been some attempts in the past to use proof-of-work-based systems to combat email spam, which put a computing resource cost in place. Crypto might have poisoned the well on that one though.

All of these are still vulnerable to state level actors though, who have large pools of financial, human, and machine resources to spend on manipulation.

Maybe instead the best way to protect communities from such attacks is just to remain small and insignificant enough to not attract attention in the first place.

Keep the user base small and fragmented

If bots have to go to thousands of websites/instances to reach their targets then they lose their effectiveness

Thankfully we can federate bot posts to make that easier :P

> GPT-4o

It’s kind of hilarious that they’re using American APIs to do this. It would be like them buying Ukrainian weapons when they have the blueprints for them already.

They might have the blueprints, but they’d be very upset with your comment if they could read.

This is another reason why a lack of transparency with user votes is bad.

As to why it is seemingly done randomly on Reddit, it is to decrease your global karma score to make you less influential and to discourage you from making new comments. You probably pissed off someone’s troll farm in what they considered an influential subreddit. It might also interest you that Reddit was explicitly named as part of a Russian influence effort here: https://www.justice.gov/opa/media/1366201/dl - maybe some day we will see something similar for other obvious troll farms operating on Reddit.